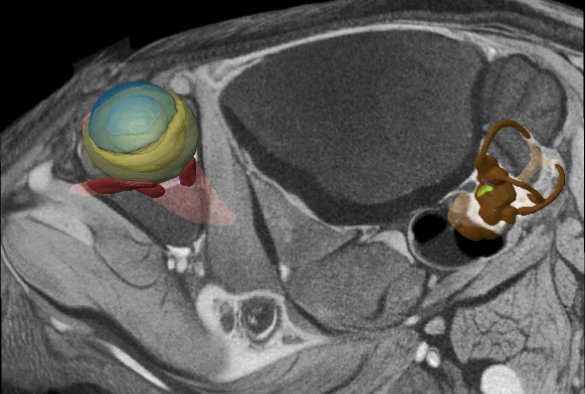

Computational reconstruction of vestibular system

The University of Liverpool has contributed to new research explaining how specialised retinal cells help stabilise vision by sensing how the animal is moving.

The finding is part of a broader discovery, made in the retinas of mice, that may help explain how mammals keep their vision stable and maintain their balance as they move.

Sensing movement in space

The brain needs a way to sense how the body is moving through space. Two key systems at its disposal are the motion-sensing vestibular system in the inner ears and vision, specifically how the image of the world moves across the retina. The brain integrates information from these two systems, or relies on one when the other isn’t available (e.g., in darkness, or when motion is seen but not felt, as in an airplane at constant cruising speed).

From observations of thousands of retinal neurons, a research team led by Brown University found that direction-selective ganglion cells (DSGCs) respond when they detect their preferred component of the radial optical flow across the mouse’s visual field. Arranged in ensembles across the retina, these cells collectively recognise the radiating optical flow produced by four distinct motions: the mouse advancing, retreating, rising or falling. Together with the corresponding ensembles in the other eye, their signals carry enough visual information to represent any motion through space, including combinations of directions such as forward and up.
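To make the idea of combining ensemble reports more concrete, here is a toy Python sketch, not the study’s model. It assumes a simplified two-dimensional plane (forward and up) and four hypothetical channels named `advance`, `retreat`, `rise` and `fall`, each of which responds only to motion with a positive component along its own axis. These channel names and unit axes are illustrative assumptions, not measured preferred directions of real DSGC types. The sketch shows that four such one-sided channels are enough to recover a combined motion such as forward and up.

```python
# Toy population code, assuming a 2-D plane where x = forward and y = up.
# The four channels and their unit axes are hypothetical stand-ins for the
# retinal ensembles described in the article, not measured tuning data.
CHANNELS = {
    "advance": (1.0, 0.0),
    "retreat": (-1.0, 0.0),
    "rise": (0.0, 1.0),
    "fall": (0.0, -1.0),
}


def channel_responses(velocity):
    """Rectified response of each channel: it responds only when the motion
    has a positive component along that channel's axis."""
    vx, vy = velocity
    return {
        name: max(0.0, vx * ax + vy * ay)
        for name, (ax, ay) in CHANNELS.items()
    }


def decode(responses):
    """Recover the velocity by summing each channel's axis, weighted by its response."""
    x = sum(responses[name] * CHANNELS[name][0] for name in CHANNELS)
    y = sum(responses[name] * CHANNELS[name][1] for name in CHANNELS)
    return (x, y)


if __name__ == "__main__":
    # Moving forward and up at the same time activates two channels at once.
    r = channel_responses((1.0, 1.0))
    print(r)          # only "advance" and "rise" are non-zero
    print(decode(r))  # the combined motion is recovered
```

The opposing pairs (advance/retreat, rise/fall) are what make the decoding work: each channel on its own carries only half of an axis, but the difference between the two members of a pair restores the full signed component.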

The team monitored 2,400 cells all over the retina via two methods. Most cells were engineered to glow whenever their level of calcium rose in response to visual input (e.g., upon “seeing” their preferred direction of optical flow). The researchers supplemented those observations by making direct electrical recordings of neural activity in places where the fluorescence didn’t take hold. The key was to cover as much retinal area as possible.

As the researchers presented moving stimuli to the retina, they saw that different types of DSGCs across the retina worked in ensembles to preferentially detect radial optical flows consistent with moving up, down, forward or back.

But if all the cells are tuned to detect the animal’s translation forward, backward, up or down, how could the system also sense rotation? The team turned to computer modelling based on their findings in the mouse, which yielded a prediction and a hypothesis: the brain could use a simple trick to notice the specific mismatch between the optical flow produced by rotation and that produced by translation.
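One way such a mismatch could be spotted, assuming laterally placed eyes like the mouse’s, is to compare the two eyes. When an animal advances, both eyes see the scene slide from front to back; when it turns on the spot, one eye sees front-to-back motion while the other sees back-to-front motion, a pairing that no pure forward or backward translation produces. The Python sketch below illustrates that binocular comparison for horizontal motion only. It is a hypothetical illustration, not the team’s model: the functions `decompose_horizontal_flow` and `describe`, the sign convention and the `threshold` value are all assumptions.

```python
# Hypothetical binocular comparison for horizontal self-motion only.
# Sign convention (assumption): a positive value means front-to-back image
# motion in that eye, which both lateral eyes see when the animal advances.


def decompose_horizontal_flow(left_flow, right_flow):
    """Split the two eyes' horizontal flow into a shared part (translation-like)
    and an opposed part (rotation-like)."""
    translation = (left_flow + right_flow) / 2
    # Turning left makes the left eye see back-to-front motion (negative) and
    # the right eye see front-to-back motion (positive), so this is > 0.
    rotation = (right_flow - left_flow) / 2
    return translation, rotation


def describe(left_flow, right_flow, threshold=0.1):
    """Label the self-motion implied by the two eyes' horizontal flow."""
    translation, rotation = decompose_horizontal_flow(left_flow, right_flow)
    labels = []
    if translation > threshold:
        labels.append("advancing")
    elif translation < -threshold:
        labels.append("retreating")
    if rotation > threshold:
        labels.append("turning left")
    elif rotation < -threshold:
        labels.append("turning right")
    return labels or ["no horizontal self-motion detected"]


if __name__ == "__main__":
    print(describe(1.0, 1.0))   # both eyes agree: translation
    print(describe(-1.0, 1.0))  # eyes disagree: rotation
    print(describe(0.5, 1.5))   # a mixture of both
```

This toy ignores the fact that the speed of translational flow depends on how far away objects are, and it leaves out vertical flow, pitch and roll entirely; it is meant only to show why opposite signals from the two eyes point to rotation rather than translation.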

Novel techniques

Researchers in Liverpool used advanced computational methods and novel imaging techniques, previously used to investigate the evolution of rodent jaws, to capture the geometry of the inner ear in relation to the eye and head. These data were then analysed in collaboration with the team at Brown University.

Dr Nathan Jeffery, University of Liverpool, said: “We have always known that the eye and inner ear provide information on movement and that these do not always tally – ever recall sitting on a stationary train whilst watching the train next door leave and getting a sense of motion? This is the eye signal trumping the inner ear signal. Until this paper, however, we have known very little about how these visual cues are encoded and how this might relate to the cues generated by the inner ear. Turns out that visual encoding starts in the eye with groups of cells that are sensitive to particular directions of movement and although linked closely to the function of the inner ear, the encoding appears to be distinct.”

Looking forward

Notably, mice differ from people in this context because their eyes are on the sides of their head rather than the front. The researchers also acknowledge that no one has yet confirmed that DSGCs are present in the eyes of humans and other primates, but they strongly suspect they are.

“There is very good reason to believe they are in primates because the function of image stabilization works in us very much the same way that it works not only in mice, but also in frogs and turtles and birds and flies,” senior author Professor David Berson, Brown University, said. “This is a highly adaptive function that must have evolved early and has been retained. Despite all the ways animals move — swimming, flying, walking — image stabilization turns out to be very valuable.”

The National Eye Institute (R01EY12793), the National Science Foundation (Grant: DMS-1148284), the Alcon Research Institute, the Sidney A. Fox and Dorothea Doctors Fox Postdoctoral Fellowship in Ophthalmology, and the Banting Postdoctoral Fellowship of Canada funded the research.

The paper ‘A retinal code for motion along the gravitational and body axes’ is published in Nature [doi:10.1038/nature22818].