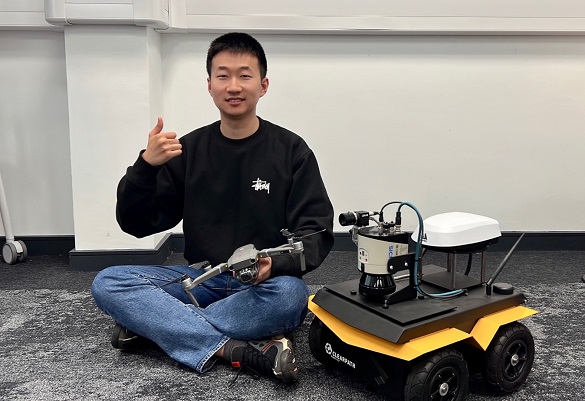

As we celebrate the official opening of the Digital Innovation Facility (DIF), Wei Huang, a fourth-year PhD student, tells us about the research he undertakes in the Trustworthy Autonomous Cyber-Physical Systems Laboratory, one of the six state-of-the-art labs located in the DIF:

The past few years have witnessed tremendous progress in Artificial Intelligence. Achievements approaching human-level performance make it possible to embed machine learning (ML) models into autonomous systems (so-called learning-enabled autonomous systems) that carry out safety-critical applications, such as robot-assisted surgery and self-driving cars.

Unfortunately, ML components have been shown to lack robustness, since adversarial examples can be easily crafted. Small, possibly human-imperceptible, perturbations to the inputs can completely change the final prediction and thus make the model unreliable.
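To make this concrete, here is a minimal sketch of an adversarial perturbation against a toy linear classifier. The weights, input, and perturbation budget `eps` are hypothetical values chosen for illustration; real attacks on neural networks follow the same idea but use gradients of the model instead of the fixed weights used here.

```python
# Toy linear classifier: predict 1 if w.x + b > 0, otherwise 0.
def predict(w, b, x):
    score = sum(wi * xi for wi, xi in zip(w, x)) + b
    return 1 if score > 0 else 0


def sign(v):
    return (v > 0) - (v < 0)


def perturb(w, x, label, eps):
    """Shift each feature by at most eps, in the direction that
    pushes the score away from the current label."""
    direction = -1 if label == 1 else 1
    return [xi + direction * eps * sign(wi) for wi, xi in zip(w, x)]


# Hypothetical model and input (not taken from any real system).
w = [0.5, -0.25, 0.75, -0.5]
b = 0.0
x = [0.2, 0.1, 0.1, 0.2]
eps = 0.05

label = predict(w, b, x)
x_adv = perturb(w, x, label, eps)
label_adv = predict(w, b, x_adv)

print("original prediction: ", label)
print("perturbed prediction:", label_adv)
```

No feature moves by more than `eps`, yet the predicted class changes, which is exactly the lack of robustness described above.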

Apart from robustness, security problems such as backdoors also raise concern. As ML techniques become prevalent, the hardware, time, and data required to train a machine learning model also increase dramatically. In this scenario, machine-learning-as-a-service (MLaaS) is becoming an increasingly popular business model. However, the training process in MLaaS is not transparent, and a backdoor, i.e., hidden malicious functionality, may be embedded into the model.

Another challenge to the wide adoption of ML models is their perceived lack of transparency. The black-box nature of ML models means they do not provide human users with direct explanations of their predictions. This has led to growing interest in Explainable AI (XAI), a research field that aims to improve the trustworthiness and transparency of AI.

My Research

I am a member of the Trustworthy Autonomous Cyber-Physical Systems (TACPS) Lab, which is directed by Professor Xiaowei Huang and Dr Xingyu Zhao.

TACPS is located on the ground floor of the Digital Innovation Facility. The DIF provides a high-performance GPU server for machine learning and a large lab space for developing robotics systems. I benefit from both in my research on the verification and validation of ML components in learning-enabled autonomous systems.

In my early work, I focused on developing testing algorithms for ML models. Specifically, I developed coverage-guided testing methods, a widely used software testing technique, for Recurrent Neural Networks (RNNs).

The new coverage metrics take temporal semantics into account in order to systematically explore the internal behaviors of RNNs, which uncovers more diverse and meaningful adversarial examples. In another project, I studied backdoor attack and defense in tree ensemble classifiers. The proposed algorithms can embed malicious knowledge into a tree ensemble easily and stealthily.

Extracting such knowledge by enumeration, however, requires nondeterministic polynomial time (NP) computation. There is therefore a complexity gap (P vs. NP) between attack and defense when working with tree ensembles.

On the XAI side, I developed a more robust and consistent explainer for ML models. Using a Bayesian technique, prior knowledge is fused with observations to give more precise and robust explanations of ML models' predictions.
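As a rough illustration of how a Bayesian approach fuses prior knowledge with observations, the sketch below applies a standard Gaussian conjugate update to a single feature-importance score. It is a simplified stand-in and not the explainer itself: the function name `fuse`, the Gaussian assumptions, and all numbers are hypothetical.

```python
def fuse(prior_mean, prior_var, observations, noise_var):
    """Gaussian conjugate update: combine a prior belief about a
    feature-importance score with noisy observed estimates of it."""
    n = len(observations)
    if n == 0:
        return prior_mean, prior_var
    sample_mean = sum(observations) / n
    # Precisions (inverse variances) add up.
    post_precision = 1.0 / prior_var + n / noise_var
    # The posterior mean is a precision-weighted average.
    post_mean = (prior_mean / prior_var + n * sample_mean / noise_var) / post_precision
    return post_mean, 1.0 / post_precision


# Hypothetical prior belief and three noisy importance estimates.
prior_mean, prior_var = 0.0, 1.0
observations = [0.9, 1.1, 1.0]
post_mean, post_var = fuse(prior_mean, prior_var, observations, noise_var=1.0)

print("posterior mean:", post_mean)
print("posterior variance:", post_var)
```

The posterior variance is always smaller than the prior variance, which reflects the intuition that combining prior knowledge with observations yields a more stable explanation than either source alone.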

Besides testing ML components, I am also concerned with the impact of ML component failures on the operation of complex robotics systems.

I studied a learning-enabled state estimation system for robotics, which uses a neural network to process sensory input. I verified the robustness and resilience of such a system when the neural network feeds adversarial input to the localization step.

In another project, I developed a novel model-agnostic Reliability Assessment Model (RAM) for ML classifiers that utilises the operational profile and robustness verification evidence. The RAM was further applied to autonomous underwater vehicles in simulation to demonstrate its effectiveness.
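The core idea of combining an operational profile with robustness evidence can be sketched as a weighted sum. This is a simplified illustration under stated assumptions, not the RAM itself: it assumes the input space has been divided into a few hypothetical regions, each with an estimated probability of being encountered in operation and an estimated probability of misclassification inside it.

```python
def unreliability(cells):
    """Estimate the probability of misclassifying the next input.

    `cells` is a list of (op_probability, failure_probability) pairs,
    one per region of the input space. Both quantities are assumed to
    have been estimated elsewhere.
    """
    total = sum(op for op, _ in cells)
    if abs(total - 1.0) > 1e-9:
        raise ValueError("operational profile must sum to 1")
    return sum(op * fail for op, fail in cells)


# Hypothetical regions: (how often seen in operation, failure rate inside).
cells = [
    (0.50, 0.00),  # common and verified robust
    (0.25, 0.04),  # less common, mostly robust
    (0.25, 0.20),  # less common, weak robustness evidence
]

estimate = unreliability(cells)
print("estimated probability of misclassification per input:", estimate)
```

Weighting by the operational profile means that a weakness in a rarely visited region contributes less to the estimate than the same weakness in a frequently visited one.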

Alongside my research, I have actively participated in competitions and pursued opportunities to collaborate with other academic partners.

My project ‘Assessing the Reliability of Machine Learning Model Through Robustness Evaluation and Operational Profiles’ won the ‘most original approach’ award at Siemens Mobility’s Artificial Intelligence Dependability Assessment – Student Challenge (AI-DA-SC), where more than 30 teams of brilliant students from 15 countries developed AI models with demanding safety requirements in mind.

I collaborated with the Edinburgh Centre for Robotics to develop verification methods for underwater vehicle systems. Currently, I am working with partners from the Université Grenoble Alpes to design novel runtime monitors for ML classifiers.

Future Plans

I am currently midway through the fourth year of my PhD. In the next few months, I will write my thesis, and I expect to pass my viva before the end of 2022. Afterwards, I want to find a postdoctoral position to continue my research on safe AI.