Dr Marie McIntyre is an epidemiologist at the University’s Institute of Infection and Global Health. In a public debate, as part of the 2015 Battle of Ideas festival in London, she asked: “Can big data save the world?”

“Big data approaches are of huge importance for both human and veterinary public health, which use analysis to identify, treat and prevent disease at the population level. Our work is very often about bringing together and analyzing multiple data sources; be they clinical health records, disease surveillance data, climate data or phone use data. Big data approaches are about creating structure and preparing data and ideas for projects properly, to help answer research questions. They involve lots of planning and consideration of biases and weaknesses in data.

Mapping disease

I currently work on several big data projects. The idea for one – the Enhanced Infectious Diseases (EID2) database – was based on some heavily used existing research which is, essentially, a list of human pathogens. It had taken several people many years to read the scientific literature and other sources to compile this list. By using open access big data sources e.g. taxonomic trees, DNA/RNA sequences and their metadata describing where, when and from which host they came, we’ve been able to replicate the original pathogen list and add to it. We’ve achieved a more complete end product, which can be regularly updated with new evidence, in a much quicker time frame.

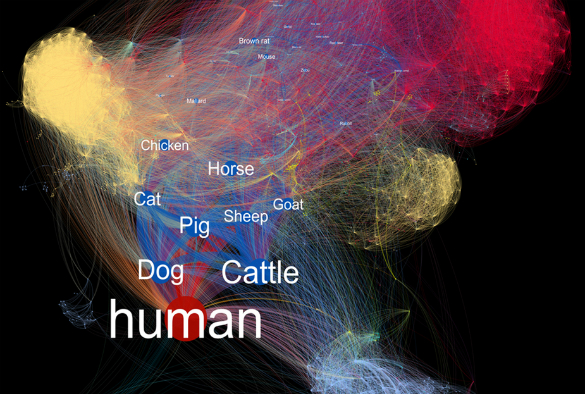

By fully mapping the relationships between human and animal diseases, their hosts, pathogens and transmission routes we can trace the history of diseases, predict the effects of climate change and other factors like demography and vegetation on pathogens, learn what disease risks exist in a population or region, and how best to tackle them, and categorize complex relationships between the carriers and hosts of numerous pathogens. Only 4% of clinically important human diseases are currently mapped and even less is known about animal infections; we can improve on this in the future. Additionally, what we’ve created is open-access, so anyone with an idea can register and use it.

Another big data project I work on – the Integrate project – is about using different sorts of health records to improve surveillance for gastrointestinal (GI) disease. We’re using anonymous data from NHS 111 and vet’s surgeries to find hotspots of infection, then getting patients reporting GI symptoms to send us samples which we investigate using improved laboratory techniques so that we can identify what’s causing the illness and stop outbreaks faster. This is a collaboration between researchers and front-line public health staff and is legally bound by various ethics and confidentiality agreements. It aims to evolve current GI disease surveillance and is an implementation of improvements in various aspects of disease care – these things should be connected together once we have improved technologies, but it’s actually really difficult to do.

Mobile phone data in disaster relief

The Battle of Ideas festival theme mentioned projects that utilise mobile phone data from disaster relief situations. There are lots of mobile phone projects being developed but this field is really new.

A retrospective analysis of the 2010 Haiti earthquake, carried out by staff affiliated to the Flowminder Foundation, showed that it’s possible to accurately estimate the number of displaced people, when major population movements occur, and also where people travelled to using mobile phone records. These estimates were much more accurate than those cobbled together just after the earthquake; these studies are necessary to learn how well techniques work, so they can be used ‘live’ in future disasters.

In another study from Global Pulse, anonymized call detail records from mobile phones were compared before, during and after a flooding event in Mexico, and combined with satellite images, rainfall and demographic data. Analysis of records provided a baseline understanding of how affected populations behave in such an emergency, including communication and movement, and also how well disaster recovery during the event could be recorded. The research highlighted how well the call data represented the local population structure and also the flooding impact, but also indicated that Civil Protection Warnings weren’t working well and should be integrated with newer approaches.

Issues to consider

The outputs of these examples all have potentially important impacts. However, there are issues about who owns data and how complete or biased sources are – not everyone owns a mobile phone or uses only one sim card.

More widely, care also has to be taken in terms of individual anonymity and personal details such as health status, sex and age. These issues are heavily governed by ethics and confidentiality agreements in the UK. Policing of data use has to be a must, with governments or appropriate civil partners, the individuals affected and the technology industry and private companies involved.

Big data approaches can’t solve every problem, particularly in a crisis. Their strength is in being used in tandem with other disciplines, just like in public health, where we need clinicians as well as laboratory staff and number crunchers – the epidemiologists and public health specialists – to help solve problems.”

This is an edited version of Marie’s opening speech from the Festival of Ideas: Can big data save the world? debate which took place on Saturday, 17 October 2015 at the Barbican in London.